Computer security for both industry and consumers focuses on protecting systems, data, and communications from a growing range of cyber threats, including malware, hacking, data breaches, fake media, and identity theft. These threats not only compromise sensitive information but also erode trust in digital interactions.

For industries, cybersecurity measures emphasize regulatory compliance, network segmentation, threat intelligence, and advanced threat detection systems. Organisations must also safeguard against deepfake technology, which can be used for corporate fraud, and ransomware attacks that cripple critical infrastructure.

Consumers, on the other hand, face increasing risks from phishing scams, financial fraud, and social engineering attacks. Identity theft, often facilitated by data breaches and leaked personal information, enables cybercriminals to commit fraud in victims’ names. Fake media, including AI-generated misinformation, contributes to deception, influencing public perception and decision-making. To mitigate these threats, individuals must adopt security measures such as multi-factor authentication, secure browsing practices, password managers, and identity monitoring services.

A good starting point is the video from the UK Police about common cyber threats. It’s just an ‘overhead’ video, but it is very useful.

Lecture 20.1 from Stanford starts with some attacks made on computer systems in the past. Then continue with Lecture 20.2, Lecture 21.1, Lecture 21.2, Lecture 22.1, Lecture 22.2, Lecture 23.1, Lecture 23.2, and the lecture series continues on to more specialist topics.

The title sets an interesting question, and the answer is designed to shock. According to an annual table published by a security company, a simple eight-character password can be cracked in only 37 seconds using brute force.

I don’t doubt that this could be true, but…

The article also points out that even if a password is weak, websites usually have security features to prevent hacking using brute force, like limiting the number of trials.

Also many portals use an additional layer of security such as two-factor authentication to prevent fraud.
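The idea of limiting the number of trials can be sketched in a few lines. This is only a minimal illustration, not how any particular website does it: the class name `AttemptLimiter`, the threshold of 5 attempts, and the 15-minute lockout are all assumptions made up for the example.

```python
import time


class AttemptLimiter:
    """Hypothetical limiter: lock an account after too many failed logins."""

    def __init__(self, max_attempts=5, lockout_seconds=900):
        self.max_attempts = max_attempts        # assumed threshold
        self.lockout_seconds = lockout_seconds  # assumed lockout duration
        self._failures = {}      # account -> consecutive failed attempts
        self._locked_until = {}  # account -> time at which the lock expires

    def is_locked(self, account, now=None):
        now = time.time() if now is None else now
        return now < self._locked_until.get(account, 0.0)

    def record_failure(self, account, now=None):
        now = time.time() if now is None else now
        count = self._failures.get(account, 0) + 1
        if count >= self.max_attempts:
            # Too many failures: start a lockout and reset the counter.
            self._locked_until[account] = now + self.lockout_seconds
            count = 0
        self._failures[account] = count

    def record_success(self, account):
        # A correct login clears the failure counter.
        self._failures.pop(account, None)


def max_guesses_per_day(max_attempts, lockout_seconds):
    """Rough upper bound on guesses an attacker gets in 24 hours."""
    return max_attempts * (86400 // lockout_seconds)


limiter = AttemptLimiter(max_attempts=3, lockout_seconds=60)
for _ in range(3):
    limiter.record_failure("alice", now=0.0)
print(limiter.is_locked("alice", now=30.0))
print(max_guesses_per_day(5, 900))
```

Under these assumed settings an attacker gets only a few hundred guesses a day against the live website, which is why the crack times quoted in such tables are more relevant to stolen password databases than to online login forms.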

The table is freely available on the Web, as are tables for previous years. And here I have a question. Comparing the tables for 2024 and 2020, it appears that it is harder to crack passwords now than four years ago. Using brute force to crack a password of 10 numbers was instantaneous in 2020 but today would take 1 hour, and a password of 15 numbers would take 6 hours in 2020 but today would take 12 years. So my question is, why is it harder now than four years ago?

I can’t get my head around a situation where in 2020 it would take a hacker 9 months to brute force break a password of 18 numbers, but in 2024 it would take 11,000 years!
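The arithmetic behind tables like these is usually just the number of possible passwords divided by the number of guesses per second. The sketch below shows that calculation. The two guess rates are invented for illustration and are not taken from any of the tables. One possible explanation for the difference between years, offered here only as an assumption, is that the tables were computed with different guess rates (for example, a slower password-hashing scheme), since the keyspace itself cannot have changed.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600

# Hypothetical guess rates, chosen only to show the effect of the rate.
FAST_RATE = 1e11  # guesses per second against a fast hash (assumption)
SLOW_RATE = 1e5   # guesses per second against a slow hash (assumption)


def keyspace(charset_size, length):
    """Number of possible passwords of exactly this length."""
    return charset_size ** length


def worst_case_seconds(charset_size, length, guesses_per_second):
    """Time to try every candidate at a fixed guess rate."""
    return keyspace(charset_size, length) / guesses_per_second


for length in (10, 15, 18):
    fast = worst_case_seconds(10, length, FAST_RATE)
    slow = worst_case_seconds(10, length, SLOW_RATE)
    print(f"{length} digits: {fast:.3g} s at the fast rate, "
          f"{slow / SECONDS_PER_YEAR:.3g} years at the slow rate")
```

Each extra digit multiplies the time by 10, and changing the assumed guess rate scales every entry in the table by the same factor.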

“How secure is my password?” tests the strength of passwords. I tried it with some fake passwords. I found that a random six numbers would take 25 microseconds, and a random 15 numbers would take 6 hours. Looks reasonable, even though it would be a really stupid system that would allow a hacker to try passwords for 6 hours. What intrigued me was finding that the password 111111111111111 also took 6 hours. This suggests to me that our hacker is not really trying to optimise his hack strategy. Surely, if you were a hacker you would try some ‘stupid number sequences’ first.
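A check for such ‘stupid’ sequences is easy to write, which is why I would expect an attacker to try them first. The function below is a hypothetical sketch of my own, not what the password-testing site does. It only covers two patterns: a single repeated character, and a run that counts up or down by one.

```python
def is_trivial_sequence(password):
    """Return True for patterns an attacker would plausibly try first."""
    if len(password) < 2:
        return True
    # All characters identical, e.g. "111111111111111" or "aaaa".
    if len(set(password)) == 1:
        return True
    # Neighbouring characters always differ by +1 or always by -1,
    # e.g. "123456", "987654" or "abcdef".
    steps = {ord(b) - ord(a) for a, b in zip(password, password[1:])}
    return steps in ({1}, {-1})


for candidate in ("111111111111111", "123456", "839201"):
    print(candidate, is_trivial_sequence(candidate))
```

A strength meter that flagged these patterns would rate 111111111111111 as far weaker than 15 random digits, even though a pure keyspace calculation treats them the same.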

I also found it odd that a simple 2 number password (11) would take 2 nanoseconds to break (111 would take 24 nanoseconds), but a 4 to 8 number password (1111 through to 11111111) was instantaneous. Why is it faster to break a larger password? Also a password aaa (or AAA) would take 400 nanoseconds, whereas aaaa was instantaneous, yet AAAA would take 11 microseconds. Why?

Whilst frequent password changes were previously advised, experts now emphasise creating strong, unique passwords and sticking with them unless they are compromised. This approach is considered more effective than frequent modifications, which can lead to weaker passwords and reusing similar ones.

But the key message is that one day someone will try to attack your computer, bank accounts, etc., and if you are not careful they will succeed.

I first saw this mentioned in the FT, but this free link is just as complete: “Arup employee falls victim to US$25 million deepfake video call scam”.

Engineering firm Arup has confirmed that one of its employees in Hong Kong fell victim to a deepfake video call that led them to transfer HK$200 million (US$25.6 million) of the company’s money to criminals. It looks like someone was convinced that they were talking to the company’s UK-based chief financial officer (CFO) by video conference. It was a hyper-realistic video, audio, etc. generated by AI. “He” asked that the Hong Kong office make 15 “confidential transactions” to five different H-K bank accounts. The scam was detected when they did a follow-up with head office.

Another attempt in a different company using a voice clone and YouTube footage for a video meeting failed.

The “Sift” strategy is a technique for spotting fake news and misleading social media posts.

More misinformation seems to be shared by individuals than by bots, and one study found that just 15% of news sharers spread up to 40% of fake news.

So what is “Sift”?

- S is for Stop. Don’t share, don’t comment.

- I is for Investigate. Check who created the post. Use reputable websites, fact-checkers, or just Wikipedia. Ask if the source could be biased, or if they are trying to promote or sell something.

- F is for Find. Look for other sources of information, use a fact checking engine, try to find credible sources also reporting on the same issue.

- T is for Trace. Find where the claim or news came from originally. Credible media outlets can also fall into a trap.

My own take on this is instead of sharing then thinking, just don’t think and don’t share. Only share when you have had time to verify, when you feel confident in the post or news item, and when you can add something, even if it’s only a personal comment or opinion. We all know that a piece of stupid fake news can be a fun item to one friend, but be destructive and destabilising to another friend.

Don’t think, don’t share, and then verify, think, edit, comment, and share selectively.

The Chinese “911 S5” botnet, a network of malware-infected computers in nearly 200 countries, was “likely the world’s largest”. It looks like it included 19 million Windows computers.

This article, “Man pleads guilty to stealing former coworker’s identity for 30 years”, describes how someone used a coworker’s identity to commit crimes and rack up debt. In addition, the victim was incarcerated after the thief accused the victim of identity theft and the police failed to detect who was who.

This article focuses on business phone systems while also addressing the broader issue of caller ID spoofing scams. In 2022, fraudulent phone calls affected over 70 million Americans, resulting in nearly $40 billion in financial losses, according to industry reports. This is an important topic and I’ve tried to cover it in detail.

How Caller ID Spoofing Works

Scammers exploit VoIP (Voice over Internet Protocol) and SS7 (Signalling System No. 7) vulnerabilities to forge caller IDs, making it appear as though they are calling from a legitimate business or government entity. The goal of these fake caller ID scams is typically to deceive victims into revealing sensitive information, transferring funds, or granting remote access to their devices.

Common Types of Caller ID Fraud

1. Tech Support Scams

A prevalent form of caller ID spoofing, tech support scams involve fraudsters impersonating well-known technology companies such as Microsoft, Apple, or Dell. The scam typically unfolds as follows:

- The scammer claims that the victim’s computer is infected with malware or has a critical issue.

- They request remote access to “fix” the problem using tools such as AnyDesk, TeamViewer, or LogMeIn.

- Once access is granted, they may install malicious software, steal financial data, or demand payment for unnecessary “repairs.”

2. Financial and Credit Card Fraud

Caller ID spoofing is frequently used in financial scams where fraudsters impersonate banks, credit card issuers, or payment services (e.g., PayPal, Venmo).

- Social Engineering Tactics: Scammers may claim that the victim’s account has been compromised or offer a phony interest rate reduction to extract credit card numbers, CVVs, or banking credentials.

- Some fraudsters also bypass two-factor authentication (2FA) by coercing victims into providing one-time passcodes.

3. Government Agency Impersonation

Fraudsters frequently spoof official government numbers, appearing as if they are calling from agencies such as the IRS, Social Security Administration (SSA), or law enforcement/police. Common tactics include:

- Threatening immediate arrest for unpaid taxes or unpaid fines.

- Demanding payment in cryptocurrency, wire transfers, or gift cards, which are hard to trace.

- Asking for personal data such as Social Security numbers (SSNs), tax records, or Medicare details for identity theft.

How to Protect Yourself from Caller ID Spoofing Scams

- Verify the Caller – Hang up and contact the organisation directly using a verified phone number from their official website.

- Avoid Providing Personal Information – Legitimate companies and government agencies do not request sensitive details (e.g., SSNs, passwords, or payment) over unsolicited phone calls.

- Enable Call Filtering & Blocking Features – Use carrier-provided anti-spam solutions.

- Use Reverse Phone Lookup Tools – Services like Truecaller can help identify and block fraudulent numbers.

- Report Scams – File complaints with local authorities and/or police.

By staying informed about caller ID spoofing tactics and using modern call authentication protocols like STIR/SHAKEN, individuals and businesses can reduce their risk of falling victim to phone scams.

“Algorithmic bias” is when algorithms produce results that appear racist or sexist, or display other forms of unfair bias. For example, an Amazon machine-learning model built to score job applications, trained on historical hiring data, downgraded female applicants, or anyone who even mentioned activities associated with women (e.g. coach of a women’s soccer league). Others found substantial discrepancies across skin types in the reliability with which three commercial face classifiers could determine gender in facial images. The classifiers could identify gender with high (99%) accuracy for white men, but accuracy was lower for darker-skinned men and for all women, with errors as high as 35% on darker-skinned women.

Of course, the algorithm itself is not biased, in the sense it is a mathematical object with no views about the world or fairness. The bias is something that we humans attribute to how the algorithm and its model function. The data used to train the Amazon job application classifier was drawn from the history of Amazon hiring decisions, which apparently included a bias against women.

The rest of the one-page “Technical Perspective” was about how algorithmic auditing can help reduce algorithmic bias.
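One very simple form of audit is to compare a classifier’s accuracy across groups, as in the face-classifier example above. The sketch below is only an illustration of that idea. The group names, labels, and records are made up, and a real audit would use far more data and more than one fairness measure.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, true_label, predicted_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        if truth == predicted:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Entirely made-up predictions for two hypothetical groups.
records = [
    ("group_a", "m", "m"), ("group_a", "m", "m"),
    ("group_a", "f", "f"), ("group_a", "f", "f"),
    ("group_b", "f", "m"), ("group_b", "f", "f"),
    ("group_b", "m", "m"), ("group_b", "f", "m"),
]

scores = accuracy_by_group(records)
gap = max(scores.values()) - min(scores.values())
print(scores, gap)
```

A large gap between the best and worst group is the kind of signal an audit looks for, although it says nothing by itself about where in the training data the problem comes from.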

But also check out “On the Implicit Bias in Deep-Learning Algorithms”.

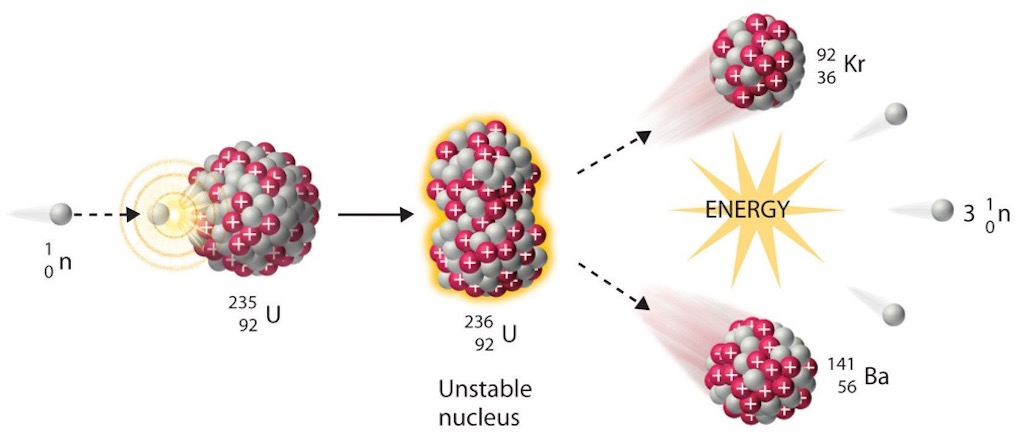

This study provides a comprehensive analysis of the ransomware ecosystem, utilizing data from the Ransomwhere platform to track Bitcoin transactions associated with ransomware attacks. The researchers identify two distinct markets within this ecosystem:

- Commodity Ransomware: This market involves widespread, often indiscriminate attacks targeting individuals or organizations. Operators typically use a single Bitcoin address to collect payments from multiple victims, resulting in numerous transactions per address. The ransom amounts are generally lower, and the attackers employ less sophisticated methods for laundering the proceeds. They often utilize multiple smaller transactions to obfuscate the origin of funds, but their laundering strategies are relatively unsophisticated, making them more susceptible to detection and intervention.

- Ransomware as a Service (RaaS): In this model, developers create ransomware and lease it to affiliates who then carry out attacks. Each victim is assigned a unique Bitcoin address for payment, enhancing operational security and complicating tracking efforts. RaaS operations demand higher ransoms and employ advanced laundering techniques, such as rapid fund transfers to mixers and the use of fraudulent exchanges, making it challenging for law enforcement to trace and recover the illicit proceeds.

The study highlights the evolution of ransomware from opportunistic attacks to a more structured and professionalized criminal enterprise. The RaaS model, in particular, has lowered the barrier to entry for cybercriminals, leading to an increase in both the frequency and severity of attacks. The researchers emphasize the need for enhanced cybersecurity measures and international cooperation to effectively combat these evolving threats.
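The difference in address usage between the two markets (one shared address for many victims versus one address per victim) suggests a simple counting heuristic. The sketch below is my own illustration and not the method used in the study. The addresses, amounts, and the threshold of 2 payments are all hypothetical, and a single payment to an address does not prove a RaaS operation.

```python
from collections import Counter


def payments_per_address(transactions):
    """transactions: iterable of (address, amount_btc) pairs."""
    return Counter(address for address, _amount in transactions)


def label_address(payment_count, reuse_threshold=2):
    """Crude label based only on how often an address was paid."""
    if payment_count >= reuse_threshold:
        return "commodity-like"  # address reused across victims
    return "raas-like"           # address seen only once


# Made-up payments for illustration.
transactions = [
    ("addr_A", 0.05), ("addr_A", 0.04), ("addr_A", 0.06),
    ("addr_B", 12.0),
    ("addr_C", 8.5),
]

counts = payments_per_address(transactions)
for address, count in counts.items():
    print(address, count, label_address(count))
```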

In 2020, Berlin-based artist Simon Weckert conducted an experiment, “Google Maps Hack”, to highlight the influence of technology on our perception of reality. He placed 99 smartphones, all running Google Maps, into a small red wagon and walked it through the streets of Berlin. Google Maps interpreted the cluster of devices moving slowly as a traffic jam, leading the application to display congested roads where there were none.

The idea was to demonstrate that systems we take for granted could be manipulated.
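To see why a wagon full of phones could look like a traffic jam, imagine a congestion rule based on the average speed reported by devices on a road segment. The sketch below is purely hypothetical. It is not Google’s algorithm, and the function name, the 50 km/h free-flow speed, the 25% ratio, and the minimum of 10 reports are all assumptions.

```python
from statistics import mean


def segment_status(speeds_kmh, free_flow_kmh=50.0, jam_ratio=0.25, min_reports=10):
    """Classify a road segment from the speeds reported by devices on it."""
    if len(speeds_kmh) < min_reports:
        return "unknown"  # too few reports to say anything
    if mean(speeds_kmh) < jam_ratio * free_flow_kmh:
        return "congested"
    return "clear"


wagon = [4.0] * 99          # 99 phones moving at walking pace
normal_traffic = [45.0] * 20

print(segment_status(wagon))
print(segment_status(normal_traffic))
```

Any rule of this kind trusts that each reporting device is a separate vehicle, and that is the assumption the experiment exploited.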

The article looks at the basics of blockchain, its individual components, how those components fit together, and what changes might be made to solve some of the problems with blockchain technology. It is worth remembering that the original objective of the blockchain system was to support “an electronic payment system based on cryptographic proof instead of trust”.

Firstly, this means ensuring the anonymity of blockchain’s users. The second goal is to provide a public record or ledger of a set of transactions that cannot be altered once verified and agreed to. The final core goal is for the system to be independent of any central or trusted authority.

While blockchain was originally proposed as a mechanism for trustless digital currency, the proposed uses have expanded well beyond that particular use case. Indeed, the emphasis seems to have bifurcated into companies that emphasize the original use for currency (thus the explosion of initial coin offerings, which create new currencies) and the use of the ledger as a general mechanism for recording and ordering transactions.

The article looks at the components and some of the problems encountered at the time. However, for me the really valuable idea is the public, nonrefutable, unalterable ledger for transactions, and not the coin application.
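The unalterable ledger idea can be illustrated with a simple hash chain, where each block stores the hash of the one before it. This is a minimal sketch under my own assumptions. The function names and the toy transactions are invented, and it leaves out everything that makes a real blockchain decentralised (consensus, proof of work, signatures).

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, previous_hash, transactions):
    """SHA-256 over a canonical JSON encoding of the block contents."""
    payload = json.dumps(
        {"index": index, "previous_hash": previous_hash, "transactions": transactions},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain, transactions):
    previous_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    index = len(chain)
    chain.append({
        "index": index,
        "previous_hash": previous_hash,
        "transactions": transactions,
        "hash": block_hash(index, previous_hash, transactions),
    })


def is_valid(chain):
    """Recompute every hash and check each link to the previous block."""
    previous_hash = GENESIS_HASH
    for index, block in enumerate(chain):
        if block["index"] != index or block["previous_hash"] != previous_hash:
            return False
        expected = block_hash(block["index"], block["previous_hash"], block["transactions"])
        if block["hash"] != expected:
            return False
        previous_hash = block["hash"]
    return True


ledger = []
append_block(ledger, ["alice pays bob 5"])
append_block(ledger, ["bob pays carol 2"])
print(is_valid(ledger))
```

Changing any earlier transaction changes that block’s hash, which no longer matches the value stored in the block and in its successor, so the tampering is detectable by anyone holding a copy of the ledger.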

The first thing that attracted me to this article was its headline quote from John le Carré,

When the world is destroyed, it will be destroyed not by its madmen but by the sanity of its experts and the superior ignorance of its bureaucrats.

And the authors then immediately went on to mention the Portuguese poet and writer Fernando António Nogueira Pessoa. In his life he assumed around 75 imaginary identities with the same ease that has become common in the cyber-social world. He would write poetry or prose using one identity, then criticise that writing using another identity, then defend the original writing using yet another identity.

Pessoa understood that identity costs little in the way of minting, forming, and maintaining, yet demands a high price for its timely and accurate attribution to physical agency.

Along with the low cost of minting and maintaining identities, a lack of constraints on using identities is a primary factor that facilitates adversarial innovations that rely on deception.

The authors of this article ask the question “Will it be possible to engineer a decentralised system that can enforce honest usage of identity via mutual challenges and costly consequences when challenges fail”?

For example, such a system should be able to reduce fake personæ in social engineering attacks, malware that mimics the attributes of trusted software, and Sybil attacks that use fake identities to penetrate ad hoc networks.

It’s worth recognising that there are a number of physical situations where people can remain anonymous or use a short-term identity (especially in the networks that are ad hoc, hastily formed, and short lived) that remains uncoupled from a user’s physical identity and allows them to maintain a strong form of privacy control. How can this dichotomy, namely trading off privacy for transparency in identity, be reconciled? The emerging logic underlying identity (what types of behaviours are expected, stable, possible) will also be central to avoiding many novel and hitherto unseen, unanticipated, and unanalysed security problems.

The authors went on to outline their approach founded upon traditional mathematical game theory, but also inspired by several mechanisms that have evolved in biology.

The article was quite technical but I found their description of ant colonies interesting. Certain eusocial species have rather sophisticated strategies involving costly signalling and credible and non-credible threats. In ant colonies, each ant has a CHC profile (Cuticular Hydrocarbon Chemicals) in which diverse information about the ant itself and its environment can be encoded. For example, ants make use of pheromones to reinforce good paths between the nest and a food source and communicate via chemical substances to inform nestmates about these good paths. In addition to the use of pheromones for marking routes, auxiliary information is stored in an ant’s CHC profile, and includes information about diet, genetics, and common nesting materials. Thus, ants from a colony where members share a certain diet have a similar CHC profile that enables them to identify non-nest members. Since CHC profiles are thought to be impossible to fabricate (without active participation by the queen ant), their use in communication by ants is an example of costly signalling.

Nature, and its more fluid notion of identity, has evolved highly robust solutions to identity management, allowing colonies to survive even in dynamic and contested environments. Therefore, the CHC profile also suggests that a bio-inspired generalization could protect technological systems. To achieve this, several challenging problems must be worked out to ensure the essential physical properties of CHC profiles are retained in their synthesized digital counterparts. A combination of design techniques like crypto-coins (for example, M-coins) can be used to share identity information and to subject data to a variety of cryptographically guaranteed constraints (even if some work remains to ensure physically accurate constraints analogous to those involved in chemical signalling).

You are invited to read the article if you are interested in learning how the authors use a classical signalling game, a dynamic Bayesian two-player game, involving a Sender who (using a chosen identity, real or fake) signals a Receiver to act appropriately. I will conclude here with a quote from the article that might inspire readers to investigate further…

When a deceptive identity succeeds, it will be used numerous times as there is no reason to abandon it after one interaction. Moreover, it is precisely the repeated interactions that are needed to develop trust.
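The point about repeated interactions can be illustrated with a simple Bayesian update of the Receiver’s trust in a Sender’s identity. This is a simplified sketch of my own and not the authors’ model. The function names and the two probabilities (how often an honest or a deceptive Sender produces an interaction that appears to go well) are assumptions chosen only for illustration.

```python
def update_trust(prior_honest, p_good_if_honest=0.95, p_good_if_deceptive=0.80):
    """One Bayes update of the belief that the Sender is honest,
    after an interaction that appeared to go well."""
    numerator = prior_honest * p_good_if_honest
    evidence = numerator + (1.0 - prior_honest) * p_good_if_deceptive
    return numerator / evidence


def trust_after(interactions, prior_honest=0.5):
    """Belief after a run of interactions that all appeared to go well."""
    belief = prior_honest
    for _ in range(interactions):
        belief = update_trust(belief)
    return belief


for n in (0, 1, 5, 20):
    print(n, round(trust_after(n), 3))
```

Because a patient deceptive Sender can behave well most of the time, each good interaction raises the Receiver’s belief only slightly, but the increases accumulate. In this toy model that is why an identity that has succeeded once is worth reusing.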