What I found interesting… in 2023

The UK National Centre for AI has created a short primer on generative AI, the kind of system capable of generating text, images, or other media in response to prompts. ChatGPT falls into this category.

This article focuses on the fact that today’s language models are more sophisticated than ever, but they still struggle with the concept of negation.

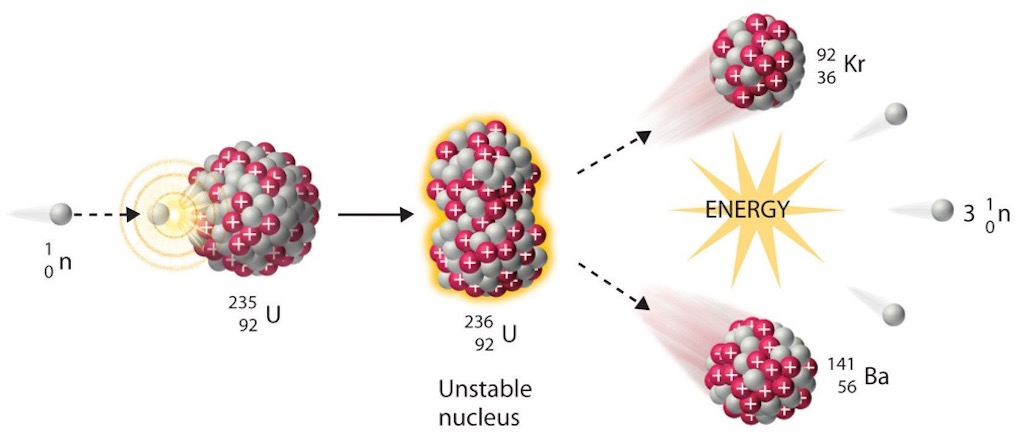

Large language models can read text, and chatbots have recently improved their humanlike performance, but they still have trouble with negation. They know what it means if a bird can’t fly, but they collapse when confronted with more complicated logic involving words like ‘not’, which is trivial for a human.

As one expert noted, “The problem is that the task of prediction is not equivalent to the task of understanding”. The example given was to ask a chatbot to complete the phrase “A robin is not a ____”. The chatbot might predict ‘robin’ or ‘bird’, simply because ‘robin’ and ‘bird’ co-occur very frequently in many contexts.

That failure is not an accident. Negations like ‘not’, ‘never’ and ‘none’ are known as stop words, which are functional rather than descriptive. Compare them to words like ‘bird’ and ‘rat’, which have clear meanings. Stop words, in contrast, don’t add content on their own; other examples include ‘a’, ‘the’ and ‘with’. Today’s models learn ‘meaning’ from mathematical weights: for example, ‘rose’ often appears with ‘flower’, ‘red’ or ‘smell’. And it’s impossible to learn what ‘not’ means this way.
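To make the co-occurrence point concrete, here is a minimal sketch in Python. It is a toy, not how a real language model works: the corpus is invented for illustration, the helper names `cooccurrence_counts` and `neighbours` are hypothetical, and real models learn weights through training rather than by counting raw pairs. It only shows that counting which words share a sentence cannot tell an affirmed relation from a negated one.

```python
from collections import Counter
from itertools import combinations

# Toy corpus, invented purely for illustration (an assumption, not real data).
SENTENCES = [
    "a robin is a bird",
    "a robin is not a fish",
    "a rose is a red flower",
    "a rose is not a bird",
    "the bird can fly",
    "the fish can not fly",
]


def cooccurrence_counts(sentences):
    """Count how often each unordered pair of distinct words shares a sentence."""
    counts = Counter()
    for sentence in sentences:
        # Deduplicate and sort so every pair is stored in one canonical order.
        words = sorted(set(sentence.split()))
        for a, b in combinations(words, 2):
            counts[(a, b)] += 1
    return counts


def neighbours(word, counts):
    """Return {other_word: count} for every word that co-occurs with `word`."""
    result = {}
    for (a, b), n in counts.items():
        if a == word:
            result[b] = n
        elif b == word:
            result[a] = n
    return result


counts = cooccurrence_counts(SENTENCES)

# "a robin is a bird" and "a rose is not a bird" leave the same trace:
# each noun shares exactly one sentence with "bird".
print(counts[("bird", "robin")], counts[("bird", "rose")])

# "not" turns up next to robins, roses, birds and fish alike,
# so its counts carry no topical signal of their own.
print(neighbours("not", counts))
```

In this toy corpus the pair counts for ‘robin’/‘bird’ and ‘rose’/‘bird’ are identical, even though one sentence affirms the relation and the other denies it. That is the sense in which a stop word like ‘not’ is invisible to this kind of statistic.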

Obviously systems are expected to improve over time, but the next step might simply be including the ability to reply “I don’t know”.

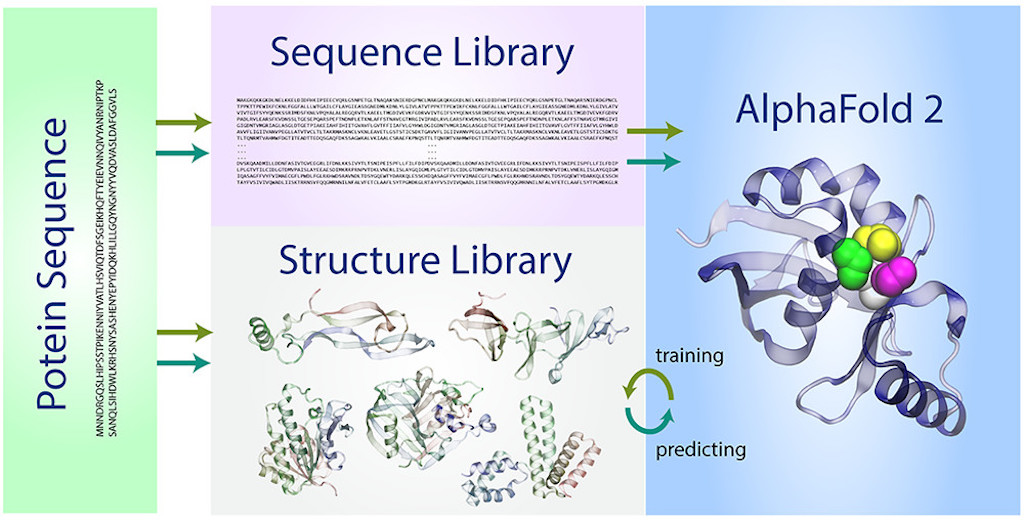

AlphaFold Spreads through Protein Science

This article from Communications of the ACM (May 2023) looked at the success of AlphaFold2 in uncovering the structures of key proteins from the SARS-CoV-2 virus. The overall objective is to accelerate vaccine and drug development.

The ACM article doesn’t hide the complexity of the problem or the techniques used, but Google DeepMind published a very good overview in 2020. Well worth a read.

This article from Communications of the ACM (May 2023) focuses on business phone systems, but more generally highlights fake caller ID scams. In 2022, phone scams affected more than 70 million Americans, to the tune of almost $40 billion in damages.

Scammers use caller ID spoofing to disguise their phone numbers. They do this to make it appear as though they are calling from a legitimate organization. The aim of fake caller ID scams is usually to trick the victim into handing over information or money.

Tech support scams are a common type of caller ID fraud. In this scenario, a scammer will call a person and claim to be from a well-known company, such as Microsoft or Apple. The scammer will say that there is a problem with the victim’s computer. They’ll then ask for remote device access or for payment to fix the issue.

Caller ID spoofing is a common tool criminals use to carry out credit card scams. Fraudsters often use social engineering to get victims to provide credit card details. For example, they’ll say they’re from a bank or credit card company, then offer to reduce the victim’s interest rate. Scammers use similar tactics to get people to hand over login credentials to online financial accounts.

Criminals also use caller ID spoofing to make it appear as if they’re calling from a government agency. For example, a scammer will call a person and tell them that they owe money to the IRS. They may then threaten legal action if the person does not pay immediately. Fraudsters may also attempt to extract personal data such as social security numbers.

It is vital to be aware of these scams and to never give sensitive information or money over the phone. Legitimate organisations will never ask for personal information or money over the phone.

This black-and-white image depicting two women of different ages won the ‘Creative Open’ category of the Sony World Photography Award. But the creator, Boris Eldagsen, refused to accept the prize because he had created the image using AI technology; no camera was involved.

The author of the article accepted that AI could be used to create images that humans cannot, but he felt that its infiltration into the art world was a dangerous, slippery slope.

The Ringer published an article on how Magnus Carlsen is changing the way chess is played. For example, despite having been the undisputed best chess player in the world since 2013, he decided not to compete in the 2023 World Chess Championship.

He has his own podcast called “The Magnus Effect”. I wonder if he is playing on words, given that the Magnus effect describes how a spinning object moving through a fluid follows a different path from the same object when not spinning.

In addition to the opinions of Magnus Carlsen on chess, I want to log the quote below from the article…

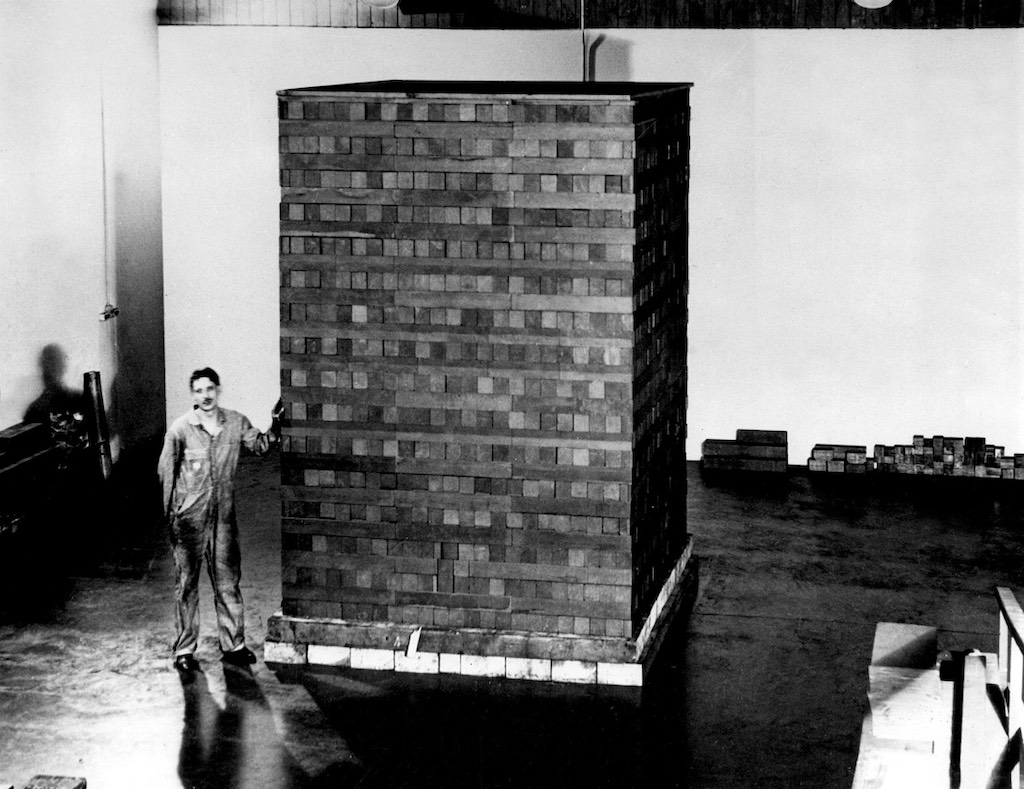

Setting aside IBM’s Deep Blue, which was designed in the mid-1990s specifically to play and defeat one person (Kasparov), the best publicly available chess engines were still only playing top players to a draw by the mid-2000s. By the mid-2010s, however, chess computers were unbeatable. In 2017, a neural network chess engine called AlphaZero took things to an otherworldly level. Programmed with nothing more than the rules of chess, AlphaZero played itself 44 million times. In 24 hours, it was strong enough to defeat the best chess computer on earth with relative ease. After getting a chance to review games played by AlphaZero in 2019, Carlsen declared himself changed. “I have become a very different player in terms of style than I was a bit earlier, and it has been a great ride”.